New AI adds anything to videos, new open-source AI agents, ultra-realistic AI videos, full camera control, Deep Research, Gemini 2.0 Pro

Welcome to the AI Search newsletter. Here are the top highlights in AI this week.

OpenAI introduced "Deep Research," a new ChatGPT feature designed for extensive research tasks. It uses the upcoming o3 reasoning model to conduct multi-step research on the internet. The tool is tailored to knowledge-based professions but can help with any task that requires thorough research. Read more

Do you prefer to watch instead of read? Check out this video covering all the highlights in AI this week:

VideoJAM is a new AI framework that improves the motion generation capabilities of video models. It does this by learning a joint appearance-motion representation, which allows the model to better capture the dynamics of real-world motion. This results in more realistic and coherent video generations. Read more

DiffVSR is a new AI framework for video super-resolution, which is the process of enhancing the quality of a low-resolution video. It uses a diffusion-based approach to address the challenge of maintaining both high fidelity and temporal consistency. The upscaled videos are more stable and higher in quality. Read more

uPix is an AI Selfie Generator that allows users to turn into anyone in just one click. Select from a vast array of templates, ranging from superheroes to business portraits, and even anime characters. Try it out today!

Google has released Gemini 2.0, its latest and most advanced AI model suite. The suite includes Gemini 2.0 Flash for high-volume tasks, 2.0 Pro Experimental for coding, and 2.0 Flash-Lite as the most affordable option. This release reflects Google's commitment to developing AI agents capable of executing complex multi-step tasks autonomously. Read more

MatAnyone is a new AI framework for video matting, which is the process of separating a person or object from the background in a video. It uses a memory-based approach to track the object across frames and maintain a consistent matte. This allows for more accurate and stable video matting results. Read more

OmniHuman Lab has developed an advanced AI system, but details about its specific capabilities were not available for this issue. Visit their website for accurate and up-to-date information about their work. Read more

Hugging Face researchers created "Open Deep-Research," an open-source alternative to OpenAI's "Deep Research" in just 24 hours. The AI agent can autonomously browse the web and generate research reports. Read more

DynVFX is a new AI framework for augmenting real-world videos with dynamic content. It uses a zero-shot, training-free approach to synthesize dynamic objects or complex scene effects that naturally interact with the existing scene. This allows for more realistic and cohesive video augmentations. Read more

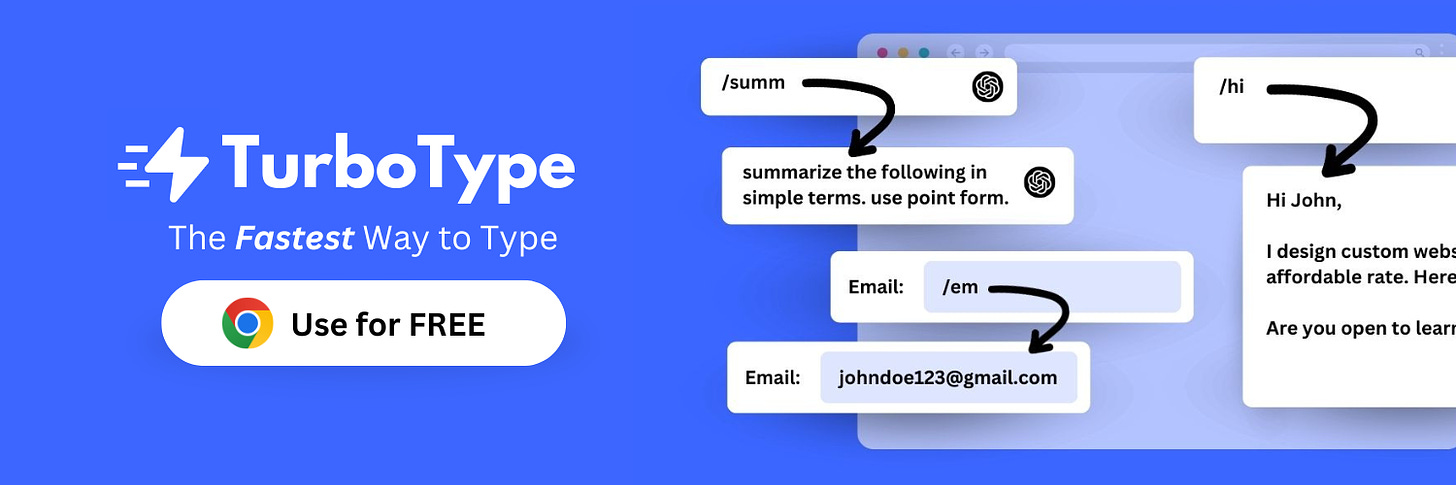

Turbotype is a Chrome extension that allows you to type faster by setting customizable shortcuts, designed to boost productivity and save time. Easily create, save, and use keyboard shortcuts for frequently used phrases. It’s free forever - try it out today!

Pika has launched a new feature called Pikadditions for video editing. This tool allows users to add any object or character from a reference image to any video. It represents an advancement in AI-powered video manipulation technology. Read more

Mistral has released iOS and Android apps for its AI assistant 'Le Chat', along with new features and pricing tiers. Le Chat can answer queries, perform web searches, generate images, and execute code. Read more

Researchers have developed a fully open-source agent called ReasonerAgent that can answer user queries by doing research in a web browser interface. The agent can perform tasks such as searching for flights, compiling online shopping options, and researching news coverage. Read more

A new method called MotionCanvas has been developed for cinematic shot design with controllable image-to-video generation. MotionCanvas integrates user-driven controls into image-to-video generation models, allowing users to control both object and camera motions in a scene-aware manner. Read more

Researchers have developed a method to train an AI reasoning model for less than $50. This was achieved by using a distillation process to extract capabilities from an existing AI model and incorporating a "thinking" step to enhance accuracy. Read more

Researchers have developed a new type of artificial neural network (ANN) inspired by biological dendrites. This innovative design allows for accurate and robust image recognition while using significantly fewer parameters, paving the way for more compact and energy-efficient AI systems. Read more